Publication

Presentation slides on Harvard IQSS

Final Report on Harvard IQSS

Credit

Collaborators: Dorice Moylan, Sarah AlMahmoud

Instructor: Rong Tang

My team of three graduate students conducted a usability testing project for the Institute for Quantitative Social Science (IQSS) at Harvard University. We worked with a site supervisor from IQSS who is responsible for the usability of the website.

The results and recommendations were presented to practitioners, including the director of IQSS Data Science, and recommendations from the research have been applied to the website.

Background

With the rise of big data, the field of computational social science has emerged. Researchers in this area study human behavior by using computational methods to collect and analyze data, a shift regarded as a paradigm change in social science research. Several tools have been built to support these researchers, but user studies and usability testing of those tools had not been conducted.

Process

1. Usability instrument development

After meeting with the site supervisor to understand the objectives and requirements of the research, we analyzed the website using what we learned in the meeting together with the website's transaction data. Based on the results, we developed a persona and a scenario, and built six main tasks, each with its own sub-tasks.


2. User testing

We conducted usability testing with four participants in a well-equipped usability lab at Simmons College, which has a user room and an observer room separated by a one-way mirror. TechSmith Morae software was used to record and analyze the sessions. Each session consisted of a pre-test interview, a set of preassigned tasks, and a post-test interview.

3. Data analysis

After the testing sessions, we analyzed both quantitative and qualitative data to measure effectiveness, efficiency, and satisfaction.
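To make the three measures concrete, here is a minimal sketch of how they are commonly operationalized: effectiveness as the task completion rate, efficiency as the mean time on task for successful attempts, and satisfaction as the mean post-test rating. The `TaskAttempt` record, the helper function names, and all numbers below are hypothetical and invented for illustration; they are not the project's data or Morae's export format.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    # Hypothetical record of one participant attempting one task.
    participant: str
    task: str
    completed: bool
    seconds: float


def completion_rate(attempts, task):
    """Effectiveness: share of attempts at `task` that were completed."""
    relevant = [a for a in attempts if a.task == task]
    return sum(a.completed for a in relevant) / len(relevant)


def mean_time_on_task(attempts, task):
    """Efficiency: mean seconds for successful attempts only (None if no successes)."""
    times = [a.seconds for a in attempts if a.task == task and a.completed]
    return mean(times) if times else None


def mean_satisfaction(ratings):
    """Satisfaction: mean of post-test ratings (scale assumed, e.g. 1 to 5)."""
    return mean(ratings)


# Invented example data for four participants on one task.
attempts = [
    TaskAttempt("P1", "Task 1", True, 120.0),
    TaskAttempt("P2", "Task 1", True, 90.0),
    TaskAttempt("P3", "Task 1", False, 300.0),
    TaskAttempt("P4", "Task 1", True, 150.0),
]
rate = completion_rate(attempts, "Task 1")
avg_time = mean_time_on_task(attempts, "Task 1")
avg_sat = mean_satisfaction([4, 5, 3, 4])
print(rate, avg_time, avg_sat)
```

Excluding failed attempts from time on task is one common convention; including them is equally defensible, so the choice should be reported alongside the numbers.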


4. Heuristic evaluation

A heuristic evaluation was also conducted to supplement the results, using the ten cognitive design principles developed by Gerhardt-Powals (1996). It uncovered eight new problems that had not surfaced during the usability testing.

Problems Identified

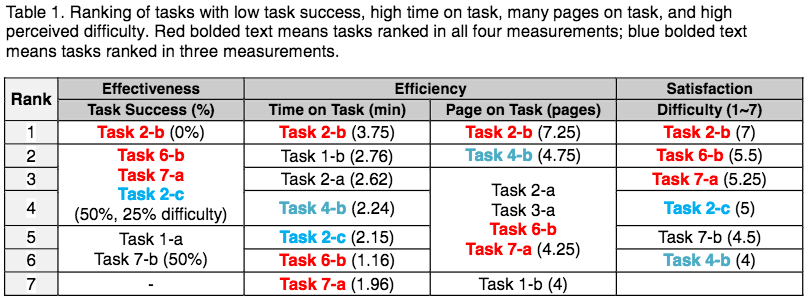

Through the user testing and the heuristic evaluation, we identified 18 problems, shown in the table below. Each problem is coded by category: A. Terminology or Label; B. Information or Content Organization; C. Amount of Information; D. Interface Design.

![]()

The three most severe problems were highlighted with video clips extracted from recordings of the testing sessions.

Recommendations

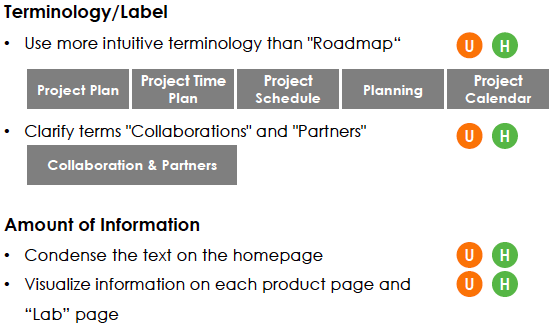

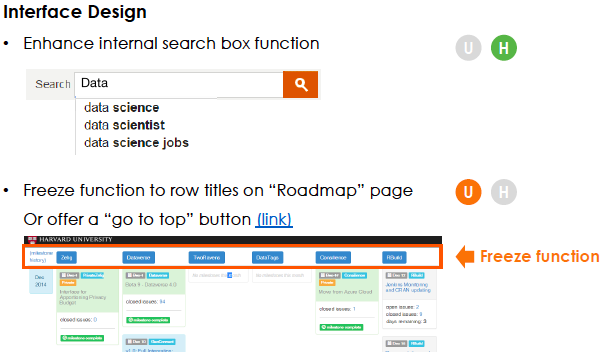

For each problem category (A/B/C/D), we proposed recommendations for each problem, with supporting images, as shown in the following figures.

![]()

![]()

![]()

Final Presentation
